I&C Calibration Frequency Mistakes

Today’s I&C workforce is stretched like never before. I&C shops are being pushed to do more with less, and it can sometimes be a nearly impossible challenge to get all of the necessary work completed properly.

Interestingly, I have observed that much of the work that our I&C personnel are so busy performing provides little value. Many of our preventive maintenance (PM) schedules and procedures are based on technologies, equipment, and problems that are no longer applicable.

As an example: is your organization blindly performing annual calibrations of all field transmitters? If so, is there sound reasoning (a regulatory requirement or some other justification) for this frequency, or is it simply based on maintenance plans that have been carried forward for decades without any actual analysis?

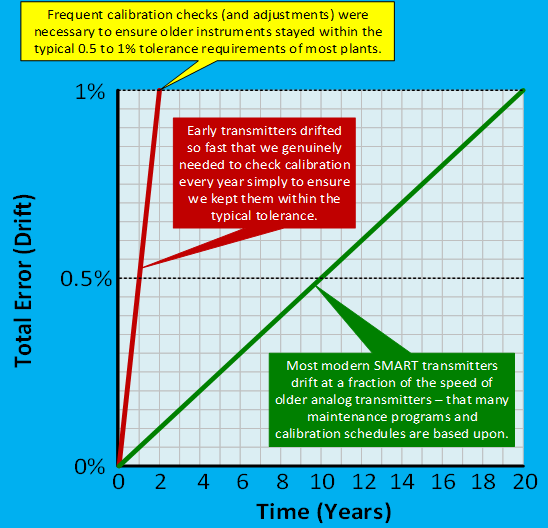

Before the advent of microprocessor-based “Smart” transmitters, it was common for analog electronic transmitters to have drift rates of up to 0.5% per year. Because of these high drift rates, most I&C shops had to perform annual calibrations of all instruments just to ensure everything operated within tolerable limits (which were, and still are, around +/- 0.5 to 1.0% of span). See the red line in the graph above.

However, nearly all of those old analog transmitters have long since been replaced by much more capable “Smart” transmitters that have dramatically lower drift rates. Modern microprocessor-based “Smart” transmitters typically have drift rates that are 10 to 40 times lower than their analog predecessors. In many cases, it would take decades for a modern “Smart” transmitter to drift out of a +/- 1% tolerance. See the green line in the figure above.
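
To put rough numbers on that comparison, here is a minimal sketch. It assumes drift accumulates linearly at a constant worst-case rate, which is a simplification, and it uses only the figures quoted above (0.5% per year for analog, 10 to 40 times lower for Smart, +/- 1% tolerance). The function name is hypothetical.

```python
# Rough comparison of how long each transmitter type takes to drift
# out of tolerance, assuming constant linear drift (a simplification).

ANALOG_DRIFT_PCT_PER_YEAR = 0.5   # upper-end analog drift quoted in the article
TOLERANCE_PCT = 1.0               # +/- 1% tolerance used in the article
SMART_IMPROVEMENT_FACTORS = (10, 40)  # "10 to 40 times lower" drift


def years_to_exceed(tolerance_pct, drift_pct_per_year):
    """Years until accumulated drift equals the allowable tolerance."""
    return tolerance_pct / drift_pct_per_year


analog_years = years_to_exceed(TOLERANCE_PCT, ANALOG_DRIFT_PCT_PER_YEAR)
smart_years = [
    years_to_exceed(TOLERANCE_PCT, ANALOG_DRIFT_PCT_PER_YEAR / factor)
    for factor in SMART_IMPROVEMENT_FACTORS
]

print(f"Analog: about {analog_years:.0f} years")
print(f"Smart:  about {smart_years[0]:.0f} to {smart_years[1]:.0f} years")
```

Under these assumptions the analog unit leaves a 1% band in about 2 years, while the Smart unit takes roughly 20 to 80 years, which is where the "decades" figure comes from.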

And yet, many organizations are still expending a major portion of their annual I&C labor hours performing the same annual calibrations of all plant transmitters that were necessary back in the 1980s.

Why? Because very few I&C engineers or technicians have had any detailed training on modern technologies such as Smart, HART, and FieldBus, or have stopped to consider how these technologies should affect their maintenance efforts. Very few organizations or textbooks provide this kind of insight in their training.

Interestingly, most instrument reference manuals provide very clear guidance and sample calculations for determining the necessary calibration frequency, based on the established statistical drift rates and acceptable site tolerances. In short, the typical approach to calculating the required calibration interval is to divide the allowable instrument tolerance (typically plus or minus 0.5% or 1.0% of span in most applications) by the expected total drift rate (which is published in typical vendor reference manuals).

For example:

Consider a common pressure transmitter in an application with a tolerance of +/- 0.5% of span and a published total stability of 0.0035% of span per month (this is the actual value for one of the most common pressure transmitters used in North America). For this situation, the simplified calculation would be as follows:

0.5% / 0.0035% per month ≈ 143 months (about 11.9 years)
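
The same division can be wrapped in a small helper so it can be repeated for other tolerances and stability figures. This is only a sketch of the simplified calculation: the function name is hypothetical, and it assumes the published stability figure behaves as a constant worst-case drift per month.

```python
def calibration_interval_months(tolerance_pct_span, drift_pct_span_per_month):
    """Months until worst-case linear drift consumes the allowable tolerance.

    Both arguments are in percent of span; the drift rate is per month.
    """
    if tolerance_pct_span <= 0 or drift_pct_span_per_month <= 0:
        raise ValueError("tolerance and drift rate must be positive")
    return tolerance_pct_span / drift_pct_span_per_month


# Values from the example: +/- 0.5% tolerance, 0.0035% of span per month.
months = calibration_interval_months(0.5, 0.0035)
years = months / 12
print(f"{months:.0f} months ({years:.1f} years)")
```

Always take the stability figure and its conditions from the vendor reference manual for the specific transmitter, since published values are usually tied to stated temperature and pressure limits.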

Because the drift rates are so much lower on newer instrumentation, the frequency of our calibration checks for many instruments could likely be reduced significantly in many situations.

To make things worse, the excessive calibration checks that many organizations are performing are often injecting more errors and problems than they are correcting (more on that topic in a subsequent blog…).

There are certainly situations where it is justifiable or necessary to perform calibration checks more frequently than the calculated interval, such as regulatory, fiscal, environmental, or legal requirements, or situations where tighter accuracy truly equates to increased profits or improved safety. But we need to avoid the mistake of blindly carrying forward maintenance plans, schedules, and procedures without factoring in equipment and technological changes.

Consider a plant with 10,000 transmitters, where the average calibration check, including permits, admin, record keeping, and the actual work, takes approximately 2 hours per device. That organization would expend 20,000 labor hours per year just to perform these calibration checks. At roughly 2,000 working hours per technician per year, that comes out to 10 full-time technicians doing nothing but calibration checks all year long!

But if we were able to extend the calibration-check interval of those 10,000 transmitters to an average of 5 years, it would reduce the annual workload for calibration checks from 20,000 hours down to 4,000 hours per year! That would free up 8 of the 10 technicians to perform other work that would likely provide much greater improvements in reliability, performance, and overall safety, such as:

- Performing more frequent and more thorough instrument walkdowns and inspections.

- Performing more preventive and corrective maintenance, such as: maintaining instrument air supply systems; cleaning and lubricating moving parts on control valves, solenoids, and actuators; checking and properly adjusting control valve packing; checking and tightening field terminals; replacing old, worn, or corroded ground wires; repairing worn or damaged cables; correcting improper instrument cable terminations and glands; correcting tubing pockets, traps, and other installation flaws; and many others.

- Properly setting up and/or monitoring more of the (often under-utilized) diagnostic capabilities of modern instrumentation. (This is another huge potential benefit of modern instrumentation that many organizations are still failing to capitalize on - more on this in a future blog...).
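
The labor-hour comparison behind this list (10,000 transmitters at about 2 hours per check) can be reproduced with a short sketch. The function name is hypothetical, and the 2,000 hours per technician per year is an assumption implied by the article's "20,000 hours equals 10 technicians" figure.

```python
HOURS_PER_TECH_YEAR = 2000  # assumption: implied by 20,000 h == 10 technicians


def annual_calibration_hours(device_count, hours_per_check, interval_years):
    """Average labor hours per year when each device is checked every interval_years."""
    if interval_years <= 0:
        raise ValueError("interval_years must be positive")
    return device_count * hours_per_check / interval_years


annual = annual_calibration_hours(10_000, 2, interval_years=1)
extended = annual_calibration_hours(10_000, 2, interval_years=5)
technicians_freed = (annual - extended) / HOURS_PER_TECH_YEAR

print(f"Annual checks: {annual:,.0f} h/year")
print(f"5-year checks: {extended:,.0f} h/year")
print(f"Technicians freed for other work: {technicians_freed:.0f}")
```

Swapping in your own device count, hours per check, and justified interval gives a quick first estimate of the labor that could be redirected.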

As I&C professionals, we need to stay fully educated on technological advances, constantly think about how those changes affect our procedures, schedules, and maintenance, and avoid falling into the status-quo trap.

Our Instrumentation & Controls courses cover this topic and many other issues and common problems that are typically missed in training environments and that are widely problematic throughout the I&C industry.

I will be highlighting many other common issues, mistakes, and problems within the I&C field in my future blogs. If you found this article helpful, please subscribe to get regular updates and information on ways to improve the I&C maintenance practices of your organization. You can also reach out to Mike directly per this link.